If you are running an AWS EKS cluster, there is a good chance you use the amazon-vpc-cni-k8s plugin, which manages pod networking using Elastic Network Interfaces (ENIs). There is also a very good chance that you run vpc-cni with its default configuration, which is fine in most cases.

However, I ran into a situation where the worker node subnets kept running dangerously low on IPs. This was hard to explain at first – I had configured a very generous /21 for the worker node subnets.

I also could not blame this problem on a high number of worker nodes – there were never enough nodes (ec2 instances) or pods to explain why so many IP addresses were consumed.

The EKS cluster in question was used to host Gitlab Kubernetes runners. Examining the usage pattern started to offer some clues about why this was happening:

- Gitlab runner pods were launched in response to pending jobs

- The runner pods were scheduled on worker nodes (ec2)

- The gitlab jobs were configured with generous cpu/mem requests, so that only 1-2 jobs could run on a single worker node (strange errors happen when jobs are starved of resources)

- Once the job was finished running, the worker node was scaled down by cluster autoscaler

At peak working hours we had a high ec2 churn rate – meaning ec2 instances were started and terminated within the span of the average gitlab job duration, roughly 10 to 15 minutes. Sometimes the nodes were reused for other Gitlab jobs, but often a Gitlab pipeline would start a large number of jobs in parallel (e.g., unit tests on multiple branches), which caused a sudden increase in nodes.

Many of these gitlab jobs were resource hungry – doing builds and tests – and therefore required larger instance types.

The next step was to take a deeper look at the aws-vpc-cni documentation, to better understand how IP addresses are used. Sure enough, I found some interesting things.

Default Behavior of AWS VPC CNI

Each node in an EKS cluster runs an ipamd DaemonSet pod named aws-node. This software is responsible for creating ENIs, allocating IPs, and attaching ENIs to ec2 instances.

    ❯ kubectl get pods -n kube-system -l app.kubernetes.io/name=aws-node
    NAME             READY   STATUS    RESTARTS   AGE
    aws-node-22vsd   2/2     Running   0          20d
    aws-node-26lc5   2/2     Running   0          14h
    aws-node-2bsp2   2/2     Running   0          15h
    aws-node-2cs4b   2/2     Running   0          15h
    aws-node-2f4tr   2/2     Running   0          20d
    aws-node-2jzll   2/2     Running   0          11h

There are 3 important configuration variables that control behavior. You can read more about these parameters on the project’s github docs.

WARM_ENI_TARGET (default: 1)

The number of warm ENIs to be maintained.

A “warm” ENI is an additional network interface (a secondary ENI) attached to a node and filled with as many reserved IPs as the ENI can hold. This ENI is attached to the node, but not yet actively used. In other words, none of the IP addresses attached to the secondary ENI have been assigned to any pods (the significance of this will be explained soon).

This means the default value WARM_ENI_TARGET=1 will result in an extra secondary “full ENI” of available IPs reserved, beyond the IPs allocated to the primary ENI when a worker node first joins the cluster [source].

What this means is that, with the default settings, both the primary and the secondary ENI will be allocated the maximum number of IPs. For example, with a t3.2xlarge instance type that supports 15 IPs per ENI and up to 4 ENIs, the default setting of WARM_ENI_TARGET=1 will allocate 30 IPs (2 ENIs with 15 IPs each).

Having an extra ENI pre-allocated with IPs allows new pods to be started faster after the primary ENI becomes fully used. It also reduces the number of AWS API calls as new pods are started, reducing the risk of API rate-limiting. However, as I will explain, this default configuration can be wasteful in some cases, since the warm ENIs still consume IP addresses from the CIDR of your VPC.
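To get a feel for how quickly this adds up, here is a rough back-of-the-envelope sketch. It assumes a hypothetical subnet `10.0.0.0/21`, the 5 addresses AWS reserves in every subnet, and the 30 IPs per node from the t3.2xlarge example above. The function names are made up for illustration.

```python
import ipaddress

# AWS reserves 5 addresses in every subnet (network address, VPC router,
# DNS, one for future use, and the broadcast address).
AWS_RESERVED_PER_SUBNET = 5


def usable_ips(cidr: str) -> int:
    """Addresses in the subnet that can actually be assigned to ENIs."""
    return ipaddress.ip_network(cidr).num_addresses - AWS_RESERVED_PER_SUBNET


def nodes_until_exhausted(cidr: str, ips_per_node: int) -> int:
    """How many nodes fit if every node reserves `ips_per_node` addresses."""
    return usable_ips(cidr) // ips_per_node


# Hypothetical /21 subnet; 30 IPs per node is the default t3.2xlarge case.
print(usable_ips("10.0.0.0/21"))                 # 2043
print(nodes_until_exhausted("10.0.0.0/21", 30))  # 68
```

Under these assumptions a /21 holds only about 68 such nodes, and with high node churn the addresses of recently terminated nodes may still be counted against the subnet for a while.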

WARM_IP_TARGET (default: max number of IPs per ENI)

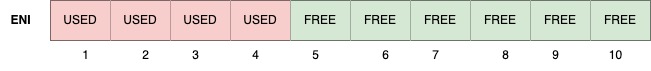

The number of warm (unassigned) IP addresses to be maintained on the currently active ENI.

A warm IP is available on an actively attached ENI, but has not been assigned to a Pod. The warm IP “target” is the number of free IP “slots” to maintain on an ENI before an additional ENI is required. A new ENI will be added to the node when the number of free IP slots on the active ENI falls below the warm IP target.

In the diagram above, slots 5-10 would be considered warm IPs – IPs that are attached, but still available (free) to assign to new pods. WARM_IP_TARGET is the desired number of “free” slots to maintain at all times. For example, if WARM_IP_TARGET=6, and in the example above another pod was launched, IP slots 1-5 would then be “used”, leaving only 5 “free” slots (6-10). This situation will trigger a new ENI to be attached.

By default, WARM_IP_TARGET is not set – ipamd will default to using the max number of IPs per ENI for that instance type as the WARM_IP_TARGET. This means that a new ENI attachment will be triggered as soon as 1 pod is started and assigned an IP, since the number of “warm” IPs is now less than the “max number of IPs per ENI”. When you set WARM_IP_TARGET, you are overriding this default behavior.

I’ll provide an example later when I talk about how I tuned this setting.

MINIMUM_IP_TARGET (default: not set, treated as 0)

The minimum number of IP addresses to be allocated at any time.

This is commonly used to front-load the assignment of multiple ENIs at instance launch.

When it is not set, it will default to 0, which causes WARM_IP_TARGET settings to be the only settings considered [source]. Going back to our discussion of WARM_IP_TARGET, this means by default, enough IPs to fill all the slots on the primary ENI will be reserved.

These defaults are designed to reduce the number of EC2 API calls that ipamd has to make to attach and detach IPs to the instance. If the number of calls gets too high, they will get throttled and no new ENIs or IPs can be attached to any instance in the cluster. Also worth noting is that creating and attaching a new ENI to a node can take up to 10 seconds [source].

Max IPs Determined by Instance Type

The maximum number of IPs that can be attached to an ec2 instance is determined by the instance type. Instance types can differ in the number of ENIs and the number of IPv4 addresses per ENI supported.

You can get more information about the ENIs and IPv4 addresses per instance type in the AWS documentation Available IPs Per ENI.

This information is also available with the aws cli:

    aws ec2 describe-instance-types --filters "Name=instance-type,Values=c5.*" --query "InstanceTypes[].{Type: InstanceType, MaxENI: NetworkInfo.MaximumNetworkInterfaces, IPv4addr: NetworkInfo.Ipv4AddressesPerInterface}" --output table
    ---------------------------------------
    |        DescribeInstanceTypes        |
    +----------+----------+---------------+
    | IPv4addr | MaxENI   | Type          |
    +----------+----------+---------------+
    | 30       | 8        | c5.4xlarge    |
    | 50       | 15       | c5.24xlarge   |
    | 15       | 4        | c5.xlarge     |
    | 30       | 8        | c5.12xlarge   |
    | 10       | 3        | c5.large      |
    | 15       | 4        | c5.2xlarge    |
    | 50       | 15       | c5.metal      |
    | 30       | 8        | c5.9xlarge    |
    | 50       | 15       | c5.18xlarge   |
    +----------+----------+---------------+

As you can see from the chart above, an xlarge instance type can support 15 addresses per interface. If you have a node that is only running 1 or 2 pods, you can already begin to see how pre-allocating 2 full ENIs can be wasteful.

So what is the maximum number of IPs that can be used on an instance (without using IP prefixes)?

We can use the formula:

    # of ENI * (# of IPv4 per ENI - 1) + 2

The first IP on each ENI is not available for pods. The + 2 accounts for pods that use host networking (AWS CNI and kube-proxy), which do not consume an ENI IP and so don’t count against the limit. There is also a document containing the max pods for various instance types in the aws-vpc-cni repo.
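As a quick sanity check, the formula can be written as a small Python function. This is only a sketch: the function name `max_pods` is made up here, and the ENI and IPv4 limits are copied from the c5 table above.

```python
def max_pods(enis: int, ipv4_per_eni: int) -> int:
    """Max pods without prefix delegation: one IP per ENI is reserved for
    the ENI itself, plus 2 for the host-networking pods."""
    return enis * (ipv4_per_eni - 1) + 2


# (MaxENI, IPv4addr) pairs taken from the describe-instance-types output.
instance_limits = {
    "c5.large": (3, 10),
    "c5.xlarge": (4, 15),
    "c5.4xlarge": (8, 30),
    "c5.24xlarge": (15, 50),
}

for name, (enis, ips) in instance_limits.items():
    print(f"{name}: {max_pods(enis, ips)}")
# c5.large: 29
# c5.xlarge: 58
# c5.4xlarge: 234
# c5.24xlarge: 737
```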

So for a c5.2xlarge, max pods will be:

    4 ENI * 14 usable IP per ENI + 2 = 58

Tuning for Low Pod / Large Instance Workloads

To recap the problem I was seeing, we had 1 or 2 Gitlab runner job pods running on large instances. These instances were only running for less than 15 minutes before being terminated. How could we avoid the over-allocation (waste) of IPs for this kind of workload?

WARM_ENI_TARGET = 0

Remember the default value is 1, meaning the primary and a secondary ENI will be allocated with IPs.

Reasoning: If we only expect 2 pods to ever be run on an ec2 instance, then the primary ENI has enough IPs to satisfy the requirements without allocating a second ENI.

WARM_IP_TARGET = 5

Recall that this setting is the number of warm (unused) IPs to keep before an additional ENI is created. The goal of setting this value is to avoid triggering an additional ENI allocation.

Example: Consider an instance with 1 ENI, each ENI supporting 20 IP addresses. WARM_IP_TARGET is set to 5. WARM_ENI_TARGET is set to 0. Only 1 ENI will be attached until a 16th IP address is needed. Then, the CNI will attach a second ENI, consuming 20 possible addresses from the subnet CIDR.

Reasoning: A setting of 5 is unlikely to cause a new ENI to be allocated for the instance types that we use in the cluster. It was important to set some value here, since the maximum number of IPs per ENI would otherwise be used as the default. In other words, by default, as soon as 1 IP is allocated to a pod, an additional ENI will be attached and “warmed”.
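The threshold in the example above can be modelled in a few lines. This is a simplified sketch of the behavior as described in this post, not the actual ipamd logic: the function name is hypothetical, and it ignores the per-instance ENI limit and the one IP per ENI that is reserved for the ENI itself.

```python
import math


def enis_attached(assigned_ips: int, ips_per_eni: int, warm_ip_target: int) -> int:
    """ENIs needed so that `warm_ip_target` IPs stay free on top of the
    IPs already assigned to pods (at least one ENI is always attached)."""
    needed = assigned_ips + warm_ip_target
    return max(1, math.ceil(needed / ips_per_eni))


# The example above: 20 IPs per ENI, WARM_IP_TARGET=5.
print(enis_attached(15, 20, 5))   # 1 -> 15 assigned + 5 warm still fit on one ENI
print(enis_attached(16, 20, 5))   # 2 -> the 16th IP triggers a second ENI

# Warm target equal to a full ENI, as in the default described above:
print(enis_attached(1, 20, 20))   # 2 -> the very first pod IP triggers a second ENI
```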

MINIMUM_IP_TARGET = 5

Remember that if MINIMUM_IP_TARGET is not set, it will default to 0, which causes WARM_IP_TARGET settings to be the only settings considered.

Reasoning: I set this to the same value as WARM_IP_TARGET explicitly, just to avoid any ambiguity.
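One way to apply these three values is to set them as environment variables on the aws-node DaemonSet with `kubectl set env`. The sketch below only builds and prints the command so it can be reviewed first; the helper name `set_env_command` is made up for illustration. If your CNI is managed as an EKS add-on or through Helm, the same variables would more likely need to be set there instead, otherwise the change may be reverted.

```python
import shlex
from typing import Dict, List


def set_env_command(
    settings: Dict[str, int],
    daemonset: str = "aws-node",
    namespace: str = "kube-system",
) -> List[str]:
    """Build a `kubectl set env` command for the CNI DaemonSet."""
    env_args = [f"{name}={value}" for name, value in settings.items()]
    return ["kubectl", "set", "env", "daemonset", daemonset, "-n", namespace, *env_args]


# The values used in this post.
tuned = {"WARM_ENI_TARGET": 0, "WARM_IP_TARGET": 5, "MINIMUM_IP_TARGET": 5}

# Print rather than execute, so the command can be checked before it is run.
print(shlex.join(set_env_command(tuned)))
# kubectl set env daemonset aws-node -n kube-system WARM_ENI_TARGET=0 WARM_IP_TARGET=5 MINIMUM_IP_TARGET=5
```

Changing the DaemonSet's environment rolls out new aws-node pods across all nodes.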

Summary

If you are a bit confused at this point, don’t worry, you are not alone. Take some time to look at the code and repo docs.

Please remember that this tuning was performed to address a specific use case – you SHOULD NOT change these settings unless you understand the implications.

Also bear in mind that changing these settings will affect all nodes in the cluster (assuming you use one aws-vpc-cni deployment). You could negatively impact some parts of the cluster if you have different usage patterns among worker groups within the same cluster.

For my issue, I was dealing with short lived nodes with only 1-2 pods running on each. These tunings drastically reduced how many IPs were used from the subnet. Remember that once allocated from the subnet, IPs may take time to be “released” after the ec2 is terminated so that those IPs can be reused.
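To put rough numbers on the savings, here is an estimate of the IPs held per node before and after tuning. It rests on two assumptions that are mine, not measurements: with the defaults a node holds two full ENIs while its pods fit on the primary ENI, and with WARM_IP_TARGET set the CNI keeps only the assigned IPs plus the warm target (never fewer than MINIMUM_IP_TARGET), plus the primary IP of the ENI. The function names are hypothetical.

```python
def ips_default(ips_per_eni: int, warm_eni_target: int = 1) -> int:
    """IPs held with default settings: the primary ENI plus the warm ENIs,
    each filled completely (assumes the pods fit on the primary ENI)."""
    return (1 + warm_eni_target) * ips_per_eni


def ips_tuned(pods: int, warm_ip_target: int, minimum_ip_target: int) -> int:
    """IPs held when WARM_IP_TARGET and MINIMUM_IP_TARGET are set (assumed model)."""
    pod_ips = max(minimum_ip_target, pods + warm_ip_target)
    return pod_ips + 1  # plus the primary IP of the primary ENI


# A node with 15 IPs per ENI running 2 runner pods.
before = ips_default(15)
after = ips_tuned(pods=2, warm_ip_target=5, minimum_ip_target=5)
print(before, after)            # 30 8

# 50 such nodes alive at the same time during a busy pipeline.
print(50 * before, 50 * after)  # 1500 400
```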

By the way, if IPs cannot be allocated to your ec2 instance, the error in CloudTrail might look something like this:

    "eventSource": "ec2.amazonaws.com",
    "eventName": "CreateNetworkInterface",
    "userAgent": "aws-sdk-go/1.33.14 amazon-vpc-cni-k8s",
    "errorMessage": "insufficient free address to allocate 1 address"

Proper Planning is the Best Medicine

Tuning is good, but proper planning is better.

When designing your Kubernetes cluster, ensure the network can accommodate the anticipated number of pods.

In the case of AWS EKS clusters, I recommend creating dedicated subnets for worker nodes to avoid the risk of starving other resources in your VPC of IP addresses.

In my case, I also put the Gitlab runner nodes in a dedicated subnet to avoid running out of IPs for other worker groups in case the number of Gitlab runner pods goes out of control.

If you do run low on IP capacity, or want to ensure a high number of available IPs from the start, remember that AWS VPC and EKS support additional CIDR blocks.

Of course, if we had switched to IPv6 by now, we probably wouldn’t need to worry about IP exhaustion 🤣.

I hope this clarifies some of the AWS VPC CNI settings. Thanks for reading.