I’m a big fan of ArgoCD – and I’m a big fan of Helmfile. In this article I will describe how to create an ArgoCD Configuration Management Plugin (CMP) for deploying helm charts using helmfile.

If you use ArgoCD for deploying to Kubernetes, you’ll know that ArgoCD has built-in support for helm, jsonnet, kustomize, and of course raw Kubernetes manifests. For other deployment methods, you’ll need to create a CMP.

Helmfile is – to put it simply – a wrapper for helm that allows you to manage multiple helm charts with one command. It also offers several ways to pass values to helm charts, including the use of golang template functions.

At my job we’ve been using helmfile with gitlab CI/CD before we introduced ArgoCD. So when we migrated deployments to ArgoCD, we didn’t want to give up the features we liked about helmfile, or do major refactoring of the repos.

Advantages of Helmfile with ArgoCD

- ArgoCD now supports remote helm charts with values from a git repo, but for a while there were some hacks that needed to be done. Helmfile already abstracts the logic to fetch remote charts and pass local values files.

- Golang templates – the sprig library as well as other gotemplate constructs can be used, allowing more advanced logic in templates.

- Use of environment variables – you can use environment variables as values or in conditional logic – something that is not easily done in plain helm.

- Keep code DRY – helmfile can merge multiple values files. This means you can have a set of values that are common between different environments, with the option to override these values with more specific settings. This greatly reduces copy&paste and makes chart installations easier to maintain.

- One centralized file – the helmfile.yaml contains all helm charts, versions, and environments. You can even use includes to organize charts by team.

- Passing secrets – by leveraging the helm-secrets plugin and the val tool, helmfile can handle fetching, decrypting, and passing secrets (vault, sops, ssm) to charts.

Have I convinced you? Then read on …

Configuring the CMP

As mentioned in the official docs, Configuration Management Plugins in ArgoCD run as a sidecar in the ArgoCD repo-server pod.

The first step is to create the CMP config file. By the way – the ConfigManagementPlugin may look like a Kubernetes object, but it is not actually a custom resource. It only follows kubernetes-style spec conventions.

ArgoCD plugins have three sections that can be customized: init, generate, and discover.

- init – always happens immediately before generate. It is useful for downloading dependencies, for example.

- generate – this is when manifests are generated to standard output. The standard output must be valid Kubernetes Objects in either YAML or JSON.

- discover – this is used by ArgoCD to automatically determine which CMP to use, for example by detecting whether a file named “helmfile.yaml” exists in the git repo.

By default, ArgoCD expects the plugin configuration file to be located at /home/argocd/cmp-server/config/plugin.yaml.

Here’s my configuration. Note that rather than trying to format the commands directly in the configuration yaml, I put all the logic in a separate bash script named argocd-helmfile.sh. I find this more readable. Because we build our own CMP docker image, it was easy to add this script to the image.

    apiVersion: argoproj.io/v1alpha1
    kind: ConfigManagementPlugin
    metadata:
      name: helmfile
    spec:
      version: v1.0
      init:
        command: ["argocd-helmfile.sh"]
        args: ["init"]
      generate:
        command: ["argocd-helmfile.sh"]
        args: ["generate"]
      discover:
        fileName: "./helmfile*.y*ml"

Note that the arguments “init” and “generate” are passed to the script for each phase. The discover configuration will allow ArgoCD to figure out what CMP to use, since there could potentially be several CMPs configured. Here we specify the pattern “./helmfile*.y*ml” to allow more flexibility with the name of the helmfile config file.
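
To get a feel for which file names the discover pattern accepts, here is a small shell sketch. It only approximates ArgoCD's glob matching with a shell case pattern, and the function name matches_discover_pattern and the Chart.yaml counter-example are mine, added for illustration.

```shell
# Approximation of the discover glob "./helmfile*.y*ml" using a shell pattern.
# matches_discover_pattern is a hypothetical helper, not part of ArgoCD.
matches_discover_pattern() {
  case "$1" in
    ./helmfile*.y*ml) return 0 ;;
    *) return 1 ;;
  esac
}

# Try a few candidate file names at the root of a repo.
for f in ./helmfile.yaml ./helmfile.yml ./helmfile-sres.yaml ./Chart.yaml; do
  if matches_discover_pattern "$f"; then
    echo "$f: match"
  else
    echo "$f: no match"
  fi
done
```

The first three names match, so a repo can use either extension or a suffixed name such as helmfile-sres.yaml and still be picked up by this CMP.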

The contents of the script argocd-helmfile.sh are as follows:

    #!/bin/bash
    set -e

    echoerr() { printf "%s\n" "$*" >&2; }

    # exit immediately if no phase is passed in
    if [[ -z "${1}" ]]; then
      echoerr "invalid invocation"
      exit 1
    fi

    # re-export every ARGOCD_ENV_* variable without the prefix
    while IFS='=' read -r -d '' n v; do
      if [[ "$n" = ARGOCD_ENV_* ]]; then
        export "${n##ARGOCD_ENV_}=$v"
      fi
    done < <(env -0)

    phase=$1

    case $phase in
      "init")
        echoerr "starting init"
        helmfile repos --quiet --file "${HELMFILE_FILE:-helmfile.yaml}"
        helmfile deps --quiet --file "${HELMFILE_FILE:-helmfile.yaml}"
        ;;
      "generate")
        echoerr "starting generate"
        if [[ -n "$HELMFILE_RELEASE_NAME" ]]; then
          # render a single release, selected by name
          helmfile template --quiet --environment "${HELMFILE_ENVIRONMENT:-default}" \
            --file "${HELMFILE_FILE:-helmfile.yaml}" \
            --selector name="$HELMFILE_RELEASE_NAME" \
            --include-crds --skip-deps --skip-tests
        else
          # render every release in the helmfile
          helmfile template --quiet --environment "${HELMFILE_ENVIRONMENT:-default}" \
            --file "${HELMFILE_FILE:-helmfile.yaml}" \
            --include-crds --skip-deps --skip-tests
        fi
        ;;
      *)
        echoerr "invalid invocation"
        exit 1
        ;;
    esac

Some explanation of the argocd-helmfile.sh script:

- Since helmfile is designed to manage multiple helm charts with one command, you will need to decide how you wish to model the ArgoCD application. The argocd-helmfile.sh script can handle both situations:

  - Abstract all helm charts managed by helmfile in a single ArgoCD application

  - Manage charts individually, each with its own ArgoCD application, by passing a value for HELMFILE_RELEASE_NAME. This will pass the --selector parameter with the release name to helmfile template.

- The init case triggers helmfile to refresh all remote repos and fetch dependencies.

- An important note about using environment variables in CMPs. The commands and scripts used in a CMP have access to 3 sources of environment variables:

  - The system environment variables of the sidecar

  - Build environment variables made available by ArgoCD

  - Variables in the ArgoCD Application spec. Be aware that ArgoCD will prefix all env variables with ARGOCD_ENV_. This is to avoid mistakenly overwriting any sensitive operating system variables. As a convenience, this script removes the ARGOCD_ENV_ prefix so variables can be used as they were originally defined in the ArgoCD application spec.

- The variable HELMFILE_FILE allows you to use a different helmfile config file – for example helmfile-sres.yaml

- The variable HELMFILE_ENVIRONMENT allows you to specify the helmfile environment. Useful when you have different environments (e.g., staging, production)

- ArgoCD Application parameters are not processed by the script. Most helm values rarely change and are defined in the values files inside the same git repo as the helmfile. This keeps things simple.
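
The prefix handling is easiest to see in isolation. The sketch below wraps the same loop in a function (the name strip_argocd_env_prefix is mine) and simulates what ArgoCD does when the Application spec defines HELMFILE_ENVIRONMENT. It assumes bash and an env that supports the -0 flag, as GNU coreutils does.

```shell
#!/bin/bash
# Re-export every ARGOCD_ENV_* variable under its original, unprefixed name.
# strip_argocd_env_prefix is a hypothetical wrapper around the loop from the script.
strip_argocd_env_prefix() {
  local n v
  while IFS='=' read -r -d '' n v; do
    if [[ "$n" == ARGOCD_ENV_* ]]; then
      export "${n#ARGOCD_ENV_}=$v"
    fi
  done < <(env -0)
}

# Simulate ArgoCD passing an Application env entry named HELMFILE_ENVIRONMENT.
export ARGOCD_ENV_HELMFILE_ENVIRONMENT="staging"

strip_argocd_env_prefix
echo "HELMFILE_ENVIRONMENT=${HELMFILE_ENVIRONMENT}"
```

Reading NUL-delimited records from env -0 keeps values that contain newlines intact, which a line-based loop over plain env output would not.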

Of course, your script could look very different depending on your use-case. This one works for us.
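
As one example of such a variation, the generate phase could build its argument list once and append --selector only when a release name is set, instead of repeating the whole command. This is a sketch rather than what we run. The function name and the HELMFILE_BIN override are hypothetical, and the override exists only so the command can be dry-run with echo.

```shell
#!/bin/bash
# Build the "helmfile template" arguments once; add --selector only if needed.
# HELMFILE_BIN is a hypothetical override used here for dry runs.
run_helmfile_template() {
  local args=(
    template --quiet
    --environment "${HELMFILE_ENVIRONMENT:-default}"
    --file "${HELMFILE_FILE:-helmfile.yaml}"
  )
  if [[ -n "${HELMFILE_RELEASE_NAME:-}" ]]; then
    args+=(--selector "name=${HELMFILE_RELEASE_NAME}")
  fi
  args+=(--include-crds --skip-deps --skip-tests)
  "${HELMFILE_BIN:-helmfile}" "${args[@]}"
}

# Dry run: print the arguments instead of invoking helmfile.
HELMFILE_BIN=echo HELMFILE_ENVIRONMENT=staging \
  HELMFILE_RELEASE_NAME=nginx-ingress-controller run_helmfile_template
```

Keeping the arguments in an array means values containing spaces are passed to helmfile as single arguments.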

Building the sidecar image

I won’t share the complete Dockerfile, only sections relevant to this discussion.

Notes

- Installing the helm-secrets plugin and sops is not required if you do not encrypt any chart values files with sops.

- The helm command is required since helmfile uses helm to generate manifests from charts.

- Other comments are inline.

    FROM ubuntu:22.04

    # set versions at the top for better visibility
    ARG HELM_VERSION="v3.10.1"
    ARG HELMFILE_VERSION="0.150.0"
    ARG HELM_SECRETS_VERSION="4.2.2"
    # SOPS_VERSION is referenced below but is not defined in this excerpt;
    # supply it at build time, e.g. with --build-arg
    ARG SOPS_VERSION

    # create the argocd user
    RUN set -eux; \
        groupadd --gid 999 argocd; \
        useradd --uid 999 --gid argocd -m argocd;

    # download sops, helm and helmfile for the build platform
    # (curl must already be installed; that step is not part of this excerpt)
    RUN OS=$(uname | tr '[:upper:]' '[:lower:]') && \
        ARCH=$(uname -m | sed -e 's/x86_64/amd64/' -e 's/\(arm\)\(64\)\?.*/\1\2/' -e 's/aarch64$/arm64/') && \
        curl --show-error --silent -L -o /usr/local/bin/sops https://github.com/mozilla/sops/releases/download/${SOPS_VERSION}/sops-${SOPS_VERSION}.${OS}.${ARCH} && \
        chmod +x /usr/local/bin/sops && \
        curl -L -O https://get.helm.sh/helm-${HELM_VERSION}-${OS}-${ARCH}.tar.gz && \
        tar zxvf "helm-${HELM_VERSION}-${OS}-${ARCH}.tar.gz" && \
        mv ${OS}-${ARCH}/helm /usr/local/bin/helm && \
        chmod +x /usr/local/bin/helm && \
        rm -rf ${OS}-${ARCH} helm-${HELM_VERSION}-${OS}-${ARCH}.tar.gz && \
        curl --show-error --silent -L -O https://github.com/helmfile/helmfile/releases/download/v${HELMFILE_VERSION}/helmfile_${HELMFILE_VERSION}_${OS}_${ARCH}.tar.gz && \
        tar zxvf "helmfile_${HELMFILE_VERSION}_${OS}_${ARCH}.tar.gz" && \
        mv ./helmfile /usr/local/bin/ && \
        rm -f helmfile_${HELMFILE_VERSION}_${OS}_${ARCH}.tar.gz README.md LICENSE

    # copy the script to a valid directory in PATH
    COPY --link argocd-helmfile.sh /usr/local/bin/argocd-helmfile.sh
    RUN chmod +x /usr/local/bin/argocd-helmfile.sh

    USER 999

    RUN helm plugin install https://github.com/jkroepke/helm-secrets --version ${HELM_SECRETS_VERSION}
    ENV HELM_PLUGINS="/home/argocd/.local/share/helm/plugins/"

    # ArgoCD plugin config copied to the expected location
    WORKDIR /home/argocd/cmp-server/config/
    COPY --link plugin.yaml .
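
The ARCH line in the download step is dense, so here is the same sed expression pulled out into a small function (the name normalize_arch is mine) and applied to a few typical uname -m values. It assumes GNU sed, which is what the Ubuntu base image ships, because the \? quantifier is a GNU extension.

```shell
# Map "uname -m" output to the architecture names used in release file names.
# normalize_arch is a hypothetical helper wrapping the sed call from the Dockerfile.
normalize_arch() {
  printf '%s\n' "$1" | sed -e 's/x86_64/amd64/' -e 's/\(arm\)\(64\)\?.*/\1\2/' -e 's/aarch64$/arm64/'
}

# Show the mapping for some common machine types.
for machine in x86_64 aarch64 arm64 armv7l; do
  printf '%s -> %s\n' "$machine" "$(normalize_arch "$machine")"
done
```

The point of the mapping is that the same Dockerfile can be built on amd64 and arm64 hosts without hard-coding the download URLs.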

Adding the CMP sidecar

One of the final steps is to add the sidecar to the ArgoCD repo-server. The way to do this depends on how you installed ArgoCD in the first place. Since we use the official ArgoCD helm chart to install ArgoCD itself, I will describe adding the config to the chart’s values.yaml.

Note: The ArgoCD helm chart provides a dedicated “cmp” section to add CMP configurations. However, because we are building our own CMP sidecar image with plugin configuration and scripts already baked in, we will configure the CMP sidecar under “extraContainers”.

    extraContainers:
      - name: cmp
        command: ["/var/run/argocd/argocd-cmp-server"]
        image: <SPECIFY URL OF YOUR CMP SERVER IMAGE HERE>
        imagePullPolicy: Always
        env:
          - name: HELM_CACHE_HOME
            value: /helm-working-dir
          - name: HELM_CONFIG_HOME
            value: /helm-working-dir
          - name: HELM_DATA_HOME
            value: /helm-working-dir
        securityContext:
          runAsNonRoot: true
          runAsUser: 999
        volumeMounts:
          - mountPath: /var/run/argocd
            name: var-argocd
          - mountPath: /home/argocd/cmp-server/plugins
            name: plugins
          - mountPath: /tmp
            name: cmp-tmp
          - name: helm-temp-dir
            mountPath: /helm-working-dir

Notice the container command /var/run/argocd/argocd-cmp-server. This program does not need to be installed on the CMP sidecar image. Since this program is already installed on repo-server running in the same pod, it is much easier (and safer) to reuse the binary from the repo-server container – this will ensure the versions of the ArgoCD components stay in sync.

To reuse the argocd-cmp-server binary, we use a shared volume (“/var/run/argocd”) on the CMP sidecar and on the ArgoCD repo-server container.

The general pattern looks like this:

    apiVersion: v1
    kind: Pod
    metadata:
      name: my-pod
    spec:
      containers:
        - name: main-container
          image: main-image
          volumeMounts:
            - name: var-argocd
              mountPath: /var/run/argocd
        - name: sidecar-container
          image: sidecar-image
          volumeMounts:
            - name: var-argocd
              mountPath: /var/run/argocd
      volumes:
        - name: var-argocd
          emptyDir: {}

Using the helmfile CMP

Let’s imagine a hypothetical git repo that uses helmfile to manage an installation of the NGINX Ingress Controller.

The repo layout might look like this:

    helmfile.yaml
    values/staging/values.yaml
    values/production/values.yaml
    releases/nginx-ingress-controller/values.gotmpl

The contents of helmfile.yaml:

    repositories:
      - name: nginx-stable
        url: https://helm.nginx.com/stable

    environments:
      staging:
        values:
          - values/staging/values.yaml
        secrets:
          - values/staging/secrets.yaml
      production:
        values:
          - values/production/values.yaml
        secrets:
          - values/production/secrets.yaml

    ---

    releases:
      - name: nginx-ingress-controller
        namespace: nginx-ingress
        chart: nginx-stable/nginx-ingress
        version: 0.17.1
        missingFileHandler: Error
        values:
          - releases/nginx-ingress-controller/values.gotmpl
        set:
          - name: controller.image.tag
            value: 3.2.1

I’m not going into details about the contents of the values files since they are not relevant to this discussion.

First, let’s create the app for our staging environment.

Creating the ArgoCD application using the argocd-cli:

    argocd --grpc-web app create "nginx-ingress-controller" \
      --repo "<your repo git url here>" \
      --path "." \
      --dest-name "my-kubernetes-cluster" \
      --dest-namespace "nginx-ingress" \
      --project "nginx-ingress-controller-staging" \
      --sync-option CreateNamespace=true \
      --plugin-env HELMFILE_ENVIRONMENT="staging"

Notice that the --plugin-env parameter is used to pass the environment variable HELMFILE_ENVIRONMENT="staging". This will be used by the argocd-helmfile.sh script to pass the correct environment to helmfile. The --path value points to the directory that contains helmfile.yaml, which in this example is the repo root.

Using the declarative approach:

    apiVersion: argoproj.io/v1alpha1
    kind: Application
    metadata:
      name: nginx-ingress-controller
      namespace: argocd
    spec:
      project: nginx-ingress-controller-staging
      source:
        repoURL: https://<your repo git url here>.git
        path: . # directory containing helmfile.yaml (the repo root in this example)
        plugin:
          name: helmfile # DEPRECATED. Discovery rules will be used exclusively in the future
          env:
            - name: HELMFILE_ENVIRONMENT
              value: staging
      destination:
        name: my-kubernetes-cluster
        namespace: nginx-ingress

Remember, ArgoCD manifests usually get created in the argocd namespace, not in the namespace where you will deploy your application.
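
The production application follows the same pattern, with only the environment changing. As a sketch, a small shell function can render the manifest for either environment. The function name render_app, the per-environment application name, and the nginx-ingress-controller-production project are my assumptions, not something defined earlier in this article.

```shell
# Print an Application manifest for one helmfile environment.
# Assumptions: one ArgoCD project per environment, named
# nginx-ingress-controller-<env>, and helmfile.yaml at the repo root.
render_app() {
  env_name="$1"
  cat <<EOF
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: nginx-ingress-controller-${env_name}
  namespace: argocd
spec:
  project: nginx-ingress-controller-${env_name}
  source:
    repoURL: https://<your repo git url here>.git
    path: .
    plugin:
      name: helmfile
      env:
        - name: HELMFILE_ENVIRONMENT
          value: ${env_name}
  destination:
    name: my-kubernetes-cluster
    namespace: nginx-ingress
EOF
}

render_app production
```

The output could then be piped to kubectl apply -f - or committed to a repo of ArgoCD manifests. In practice the destination cluster would probably differ per environment as well.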

Updating the application image tag

Most values for a chart such as nginx-ingress-controller rarely change. But what if you use helmfile to deploy a custom application where you need to update the image tag with every release?

If you follow the common practice of using the git commit hash as the image tag, then this is easy. The commit hash is passed to CMPs as part of the build environment variables.

The variable ARGOCD_APP_REVISION will contain the commit hash. Again, you do not need to pass this explicitly – it is automatically passed by ArgoCD.

To use this as a value in helmfile, you can take advantage of built-in helmfile function requiredEnv:

    releases:
      - name: my-app
        namespace: my-namespace
        version: 1.0.0
        chart: some-chart
        missingFileHandler: Error
        set:
          - name: deployment.image.tag
            value: {{ requiredEnv "ARGOCD_APP_REVISION" | substr 0 8 }}

When helmfile is executed, it will render the variable ARGOCD_APP_REVISION, taking the first 8 characters.
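
For this to work, the build pipeline has to tag the image with exactly the same 8 characters. A minimal sketch of that truncation in shell follows. The function name short_tag and the commit hash are made up for illustration.

```shell
# Keep the first 8 characters of a commit hash, like "substr 0 8" in the helmfile.
# short_tag is a hypothetical helper name.
short_tag() {
  printf '%.8s' "$1"
}

# Hypothetical full commit hash, standing in for ARGOCD_APP_REVISION.
revision="3f9c2a7be41d5c6f8a0b1d2e3f4a5b6c7d8e9f00"
image_tag="$(short_tag "$revision")"
echo "$image_tag"   # prints 3f9c2a7b
```

In a CI job the hash would come from something like git rev-parse HEAD, and image_tag would be used as the tag when building and pushing the image.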

Putting everything together, your complete pipeline would typically perform the following tasks:

- test the app

- build the app docker image, tagging it with the commit hash (or partial hash)

- push the docker image to your image registry

- ArgoCD generates and applies the app’s manifests to Kubernetes

- The Kubernetes Deployment contains the image tagged with the current commit hash.

Moving from helm/helmfile to ArgoCD

If you have previously used helm/helmfile to manage charts outside of ArgoCD, it is worth remembering that ArgoCD does not run “helm install” or “helmfile apply”. ArgoCD uses helm/helmfile only as a tool to generate the Kubernetes manifests – in other words, it runs “helm template” or “helmfile template”.

This means that helm state is no longer managed inside the cluster (using secrets). The state of the installed helm chart is now managed by ArgoCD.

Therefore, when you migrate to ArgoCD, you may wish to carefully remove the helm secrets that hold the release state of previous deployments, since helm no longer manages those releases.
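
Before removing anything, it helps to list what is there. Helm 3 labels its release-state secrets with owner=helm and name=<release>, so a sketch for finding them could look like this. The function names are mine, and the commented example reuses the release and namespace from the helmfile above.

```shell
# Build the label selector matching the state secrets of one Helm 3 release.
# Both function names are hypothetical helpers for this article.
helm_release_selector() {
  printf 'owner=helm,name=%s' "$1"
}

# List (do not delete) the secrets so they can be reviewed first.
list_helm_release_secrets() {
  release="$1"
  namespace="$2"
  kubectl get secrets --namespace "$namespace" \
    --selector "$(helm_release_selector "$release")"
}

# Example (requires kubectl and access to the cluster):
#   list_helm_release_secrets nginx-ingress-controller nginx-ingress
```

Once the listed secrets have been reviewed, the same selector can be used with kubectl delete secrets.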

Conclusion

I hope this article gives you some insights and demystifies ArgoCD Configuration Management Plugins. CMPs are a great feature of ArgoCD, allowing you to use tools for rendering manifests that are not natively supported.

If you are interested in more articles on ArgoCD, let me know.

Question: I’m curious – what was the main reason (main benefits) to switch from Helmfile + Gitlab CICD to ArgoCD?

Hi Boris. First of all, congratulations on being my very first comment on this blog. Thanks for reading it 🙂

There were a few motivations for switching from Gitlab to ArgoCD:

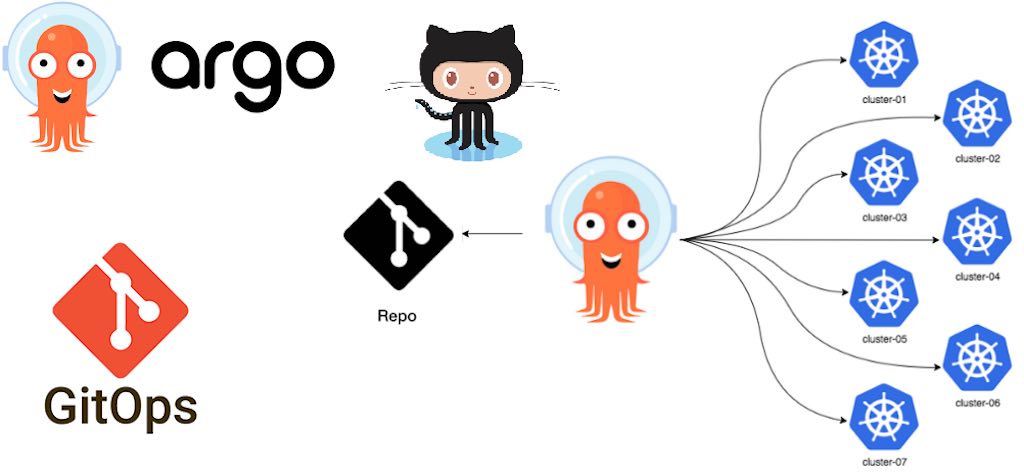

* We wanted to adopt a more GitOps strategy, where the git repo is the source of truth and changes to the repo will trigger a sync without triggering gitlab pipelines.

* We wanted to avoid the complexity (and security risks) of managing credentials for the gitlab pipeline to access kubernetes clusters.

* We liked the features of ArgoCD UI for viewing logs, diffs and manifests and its ability to do rollbacks and store history.

* We wanted to offload the deployment logic to ArgoCD. Instead of managing complex job templates to deploy to kubernetes in different ways, we wanted to take advantage of ArgoCD’s built-in constructs. It’s a more centralized approach.

Managing ArgoCD does add some overhead, but managing gitlab runners also requires maintenance.

I hope this answers your question. Thanks again for your interest.

Thank you for the answer Jonathan! Nice post btw 🙂